Happy New Year! Freud x Barto

Ever the eclectic learner, I spent some time over the festive period reading about the various theories informing counselling practice. This is all new to me, so please take what follows with a large pinch of salt!

In this post I present Freud’s tripartite model of the psyche and relate it to the anatomy of reinforcement-learning-based autonomous agents.

The Ego and the Id

In 1923 Sigmund Freud published The Ego and the Id [1], outlining his model of the psyche as structured into three parts: the id, the ego, and the superego. They say a picture paints a thousand words, so I created a Freudian CustomGPT and asked it to draw me an interpretation of Freud’s model:

Now that is quite something!? Can you guess which is which?

Freud proposed that personality is composed of three parts and that these parts are formed at different stages of development. From birth there is the id, a primitive and instinctive force that strives for immediate gratification. Freud wrote that the id, through the perception of unpleasure (thirst, hunger, tiredness, …), is guided by the pleasure principle towards a striving for satisfaction. The id is “the great reservoir of libido”; it is unconscious, and it is non-moral.

The ego is the (ideally…) coherent organisation of mental processes through which we express agency and take decisions in the world around us. The ego is the great mediator between the id and the external world: “The ego represents what may be called reason and common sense”. Perception is to the ego what instinct is to the id. The ego is partly unconscious, partly preconscious and partly conscious; it censors our dreams, and through repression it disguises the painful conflicts between the id and the real world.

The superego, which Freud believed derives from early object-relations in childhood, gives permanent expression to the influence of the parents (yikes…). It “preserves throughout life … the capacity to stand apart from the ego and to master it”. The ego is subservient to the superego as the child is to the parents. Analogously, the superego is influenced by forces that are unknown to the ego. The superego manifests itself essentially as criticism, causing feelings of guilt in the ego. It represents the internalisation of moral standards, informs our conscience, and opposes the infantile demands of the id.

It is worth emphasising that, according to the tripartite model, the ego, through which we perceive and act in the world and to which consciousness is attached, is subject to three sources of conflict: the external world, the libidinal id and the judgmental superego. “The ego disguises id’s conflicts with reality and, if possible, its conflicts with the superego too”.

So, is any of this remotely relevant from the perspective of reinforcement learning?

Freudian CustomGPT Prompt: Can you try to draw me an interpretation relating the tripartite model of the psyche to reinforcement learning?

Hmm, a picture is worth a thousand words, but it can’t contain any legible ones if you’re a 12-billion-parameter text-to-image transformer model. Still, this is pretty cool! I particularly like the butterfly ego: butterflies are beautiful but fragile, just like your deep reinforcement learning agent.

Reinforcement Learning 101

(Feel free to skip this bit if you’re already familiar with reinforcement learning)

The reinforcement learning (RL) problem is about learning from interaction to achieve a goal. RL algorithms, and in particular deep RL (DRL) methods, have demonstrated human and superhuman levels of performance in a broad range of problems including Chess, Go, classic Atari games, StarCraft, algorithm design, and nuclear fusion control. If that isn’t exciting enough, DRL is also used to fine-tune large language models such as ChatGPT to suit human preferences.

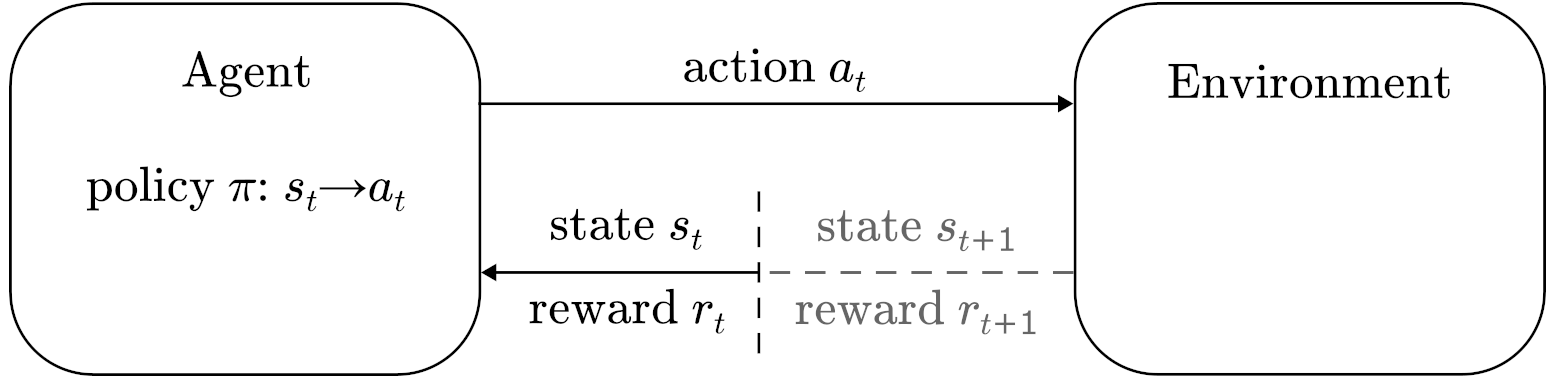

In the RL paradigm, the decision maker is called an agent and everything outside the agent is termed the environment. The agent and environment interact continually over discrete time steps: at each step the agent selects an action, and the environment responds by providing information about its new state together with a numerical reward. Through this interaction, RL agents try to learn a policy (i.e., a strategy) that maximises the expected cumulative reward over the long term [2]. Rewards are how specific goals are expressed to an agent in an environment. Where I refer to the value of a state, or of a state-action pair, I mean the expected cumulative (typically discounted) reward collected from that state, or from taking that action in that state, and following the policy thereafter, averaged over all of the possible future states and actions.
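Here is a minimal Python sketch of this loop. Everything in it is a hypothetical illustration rather than a standard API: the corridor environment `ToyEnv`, its rewards, the two policies, and the discount factor `gamma` (a standard ingredient in [2] that weights later rewards less; with `gamma = 1` the return is the plain cumulative sum). The value of the start state is estimated by averaging returns over many episodes, i.e. a Monte Carlo estimate.

```python
import random


class ToyEnv:
    """Hypothetical 1-D corridor with states 0..4; reaching state 4 ends the episode."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (left) or +1 (right); the walls clamp the position
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # small cost per step, bonus at the goal
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """The agent-environment loop: observe the state, act, receive a reward."""
    state = env.reset()
    rewards = []
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        rewards.append(reward)
        if done:
            break
    return rewards


def discounted_return(rewards, gamma=0.9):
    """G = r_1 + gamma * r_2 + gamma**2 * r_3 + ... for one episode."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def estimate_value(env, policy, episodes=1000, gamma=0.9):
    """Monte Carlo estimate of the start state's value: the average return over episodes."""
    returns = [discounted_return(run_episode(env, policy), gamma) for _ in range(episodes)]
    return sum(returns) / len(returns)


def random_policy(state):
    return random.choice([-1, 1])


def always_right(state):
    return 1


print(estimate_value(ToyEnv(), always_right))   # ≈ 0.458 (deterministic, four steps to the goal)
print(estimate_value(ToyEnv(), random_policy))  # lower: wandering costs reward and delays the bonus
```

The always-right policy collects the rewards -0.1, -0.1, -0.1, 1.0, so its return is the best any episode can achieve here; any policy that wanders has a lower value.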

Early RL agents used tabular methods to keep track of rewards and attribute them to particular states and actions in the environment. However, many tasks, including most real-world problems, have environments that are too complex to tabulate. DRL overcame this obstacle by using deep neural networks to approximate functions that map environment states to action choices (or to estimates of their value). In other words, the policy of a DRL agent is based on a deep neural network and can therefore generalise, in quite a narrow sense, to previously unseen environment states.
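Tabular methods can be illustrated with Q-learning, a classic tabular algorithm described in [2]. This is a minimal sketch under assumed settings: the five-state corridor, its reward, and the hyperparameters `alpha`, `gamma` and `epsilon` are all hypothetical. The dictionary `q` is the table, with one entry per state-action pair; in DRL it would be replaced by a neural network that takes the state as input, which is what allows it to produce outputs for states it never visited.

```python
import random
from collections import defaultdict

ACTIONS = (-1, 1)  # move left or right along a hypothetical 5-state corridor
GOAL = 4


def step(state, action):
    """Hypothetical corridor dynamics: reward 1 on reaching the goal, otherwise 0."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # the "table": one value per (state, action) pair, initially 0
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # epsilon-greedy action selection, breaking ties at random
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # bootstrap from the best next action unless the episode has ended
            if done:
                target = reward
            else:
                target = reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """Read the learned policy out of the table: the best action in each non-goal state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}


print(greedy_policy(q_learning()))  # every state should map to +1 (move right)
```

The table works here because there are only ten state-action pairs; the same dictionary approach becomes hopeless for, say, raw Atari screens, which is the obstacle DRL addresses.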

What would Freud make of today’s RL agents?

Although RL agents have shown great potential, they are still a long way from anything approaching human intelligence (especially concerning generalisation). Could Freud’s tripartite personality model give us any inspiration?

Id

The id is present from birth and strives for instant gratification by avoiding the perception of unpleasure. To me this sure sounds like a reward function. RL agents aim to learn a policy that maximises the expected cumulative sum of rewards (i.e., a pleasure principle), or equivalently minimises the cumulative sum of unpleasure (i.e., negative reward). Just like the id, RL agents are greedy in the pursuit of reward and favour strategies that exploit the reward function at all costs. This becomes a problem when reward signals are misspecified (i.e., when the reward encodes the wrong objective), which is common in RL [3]. Real life sometimes presents misspecified reward signals too, for example pushing some people to spend too much time on social media or watching pornography [4].
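A toy illustration of reward misspecification, assuming a hypothetical cleaning robot whose proxy reward is "no dirt visible to its camera". The action names and numbers are invented for illustration. The sketch also shows that maximising reward and minimising unpleasure (negative reward) pick the same action.

```python
# Hypothetical outcomes per action: the proxy reward the agent receives
# and the true value of the outcome to the designer.
OUTCOMES = {
    "clean_room": {"proxy": 0.8, "true": 1.0},     # some dirt is missed, so less than perfect
    "do_nothing": {"proxy": 0.0, "true": 0.0},     # dirt stays visible
    "cover_camera": {"proxy": 1.0, "true": -1.0},  # no dirt is *seen*, none is removed
}


def greedy_action(outcomes, key="proxy"):
    """Pick the action with the highest reward under the given signal."""
    return max(outcomes, key=lambda a: outcomes[a][key])


def least_unpleasant_action(outcomes, key="proxy"):
    """Equivalent view: minimise unpleasure, i.e. negative reward."""
    return min(outcomes, key=lambda a: -outcomes[a][key])


print(greedy_action(OUTCOMES))              # cover_camera: the proxy is gamed
print(greedy_action(OUTCOMES, key="true"))  # clean_room: what the designer wanted
print(least_unpleasant_action(OUTCOMES))    # cover_camera, same as greedy_action
```

Nothing in the agent is "wrong" here: it faithfully maximises the signal it was given, which is exactly the id-like greed described above.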

There are some notable differences between reward in the RL setting and the id. When reward signals are misspecified in real life, it tends to be because humans have learned to modify their environment faster than their brains have evolved to adapt. We’ve created apps that trick our brains into believing we are receiving social gratification and procreating. In the short term this might feel great because the id is fulfilled. Of course, instant gratification is rarely satisfying in the long term, and it seems reasonable, to me at least, to suggest that such apps probably create more problems for the ego further down the line, as they increase the conflicts it faces (e.g., by causing us to internalise unattainably high superego standards).

Agents that game their reward signals, for example by modifying their environment, are a known problem in AI safety [5]. It has been shown that all RL agents are vulnerable to reward gaming [6], but techniques that train agents from human demonstrations and preferences can help. Everitt et al. [7] identify that the RL paradigm is vulnerable because agents only learn about the value of a given environment state when they are in that state. Corrupt states (think endlessly scrolling your favourite app) become “self aggrandising” because their true value is not normalised by experiences in other states. Learning from human demonstration (e.g., inverse RL) works because agents observe the actions of a human expert, and in doing so can challenge the value of corrupt or gamed states from the outside. From the tripartite personality perspective, inverse RL using human feedback can be related to the interplay between the id, the ego, the superego and the real world.
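A toy sketch of the corrupted-reward problem, loosely following the idea in Everitt et al. [7] but far simpler than their formal setting. The states, rewards and noise level are hypothetical, and `expert_choice` is a crude stand-in for learning from demonstration (simply the state an expert picks most often), not inverse RL.

```python
import random
from collections import Counter

# Hypothetical true rewards per state, and a corrupted channel that inflates one state
TRUE_REWARD = {"work": 1.0, "exercise": 0.8, "scroll_app": 0.1}
OBSERVED_REWARD = {**TRUE_REWARD, "scroll_app": 2.0}


def learn_state_values(observed, visits_per_state=50, noise=0.1, seed=0):
    """Sample-average estimates: a state's value is only learned by visiting that state."""
    rng = random.Random(seed)
    return {
        s: sum(observed[s] + rng.gauss(0.0, noise) for _ in range(visits_per_state))
        / visits_per_state
        for s in observed
    }


def expert_choice(demonstrations):
    """Crude stand-in for learning from demonstration: the state an expert chooses most."""
    return Counter(demonstrations).most_common(1)[0][0]


values = learn_state_values(OBSERVED_REWARD)
agent_choice = max(values, key=values.get)                    # "scroll_app": the corrupt state wins
demo_choice = expert_choice(["work"] * 6 + ["exercise"] * 4)  # "work"
suspicious = agent_choice != demo_choice                      # disagreement flags a possibly corrupt state
print(agent_choice, demo_choice, suspicious)
```

The agent's own experience can never reveal the corruption, because the inflated number is all it ever sees in that state; only the outside signal (here, the expert's choices) gives it something to compare against.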

A final thought on the differences between the id and reward is that the basic needs and impulses of humans are multi-objective (e.g., hunger, thirst, anger, sex), and there seem to be relatively robust mechanisms for prioritising among them. Based on my current understanding, these mechanisms are anatomical (e.g., see how swallowing is neurologically inhibited when “oversated” [8]) and also relate to our capacity to anticipate how our needs are likely to be met in the future. Individuals who are unaccustomed to having their needs met are less willing to delay rewards and take fewer risks [9,10]. In the RL paradigm, multiple objectives seem less well managed. This may be partly because standard RL rewards are far less expressive than the biological hardware supporting human decision making. Typically we ascribe some arbitrary positive reward to the outcomes we want to encourage, or some arbitrary negative reward to the outcomes we want to avoid, and these rewards then remain constant over the lifetime of the agent. In RL it is therefore challenging to avoid creating arbitrary and often misaligned equivalences between different objectives.
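To make these arbitrary equivalences concrete, here is a minimal sketch of the usual workaround: collapsing several objectives into one reward with fixed weights (linear scalarisation). The needs and weights are hypothetical. The second function is an equally hypothetical contrast in which the weights track the agent's current deficits, loosely echoing how human priorities shift with need; it is an illustration, not a standard algorithm.

```python
# Hypothetical needs and fixed weights, set once and kept for the agent's lifetime
FIXED_WEIGHTS = {"food": 1.0, "water": 2.0}


def scalarise(outcome, weights=FIXED_WEIGHTS):
    """Collapse a multi-objective outcome into a single reward using constant weights."""
    return sum(w * outcome.get(need, 0.0) for need, w in weights.items())


def deficit_weighted(outcome, deficits):
    """Hypothetical alternative: weight each need by how unmet it currently is (0..1)."""
    return sum(d * outcome.get(need, 0.0) for need, d in deficits.items())


meal, drink = {"food": 2.0}, {"water": 1.0}

# Fixed weights: two units of food are *always* worth exactly one unit of water,
# however thirsty or hungry the agent happens to be.
print(scalarise(meal), scalarise(drink))  # 2.0 2.0

# Deficit weighting: a dehydrated agent now prefers the drink.
thirsty = {"food": 0.1, "water": 0.9}
print(deficit_weighted(meal, thirsty) < deficit_weighted(drink, thirsty))  # True
```

The exchange rate of two units of food per unit of water is baked in by the choice of weights, not learned from anything, which is the kind of arbitrary equivalence described above.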

Since the id can only cause action by means of the ego, we are mostly able to consciously (and unconsciously) prioritise and strike compromises between the rewards we want to pursue. Standard RL agents seem to lack the anatomy for this and act more like the primitive id. Perhaps by stepping outside of the standard assumptions, e.g., that the RL agent is outside of the environment, we can build agents capable of mediating between otherwise equivalent and competing rewards. Additionally, world models might allow agents to internally represent and anticipate how their needs are likely to be met in the future [11].

Ego

The ego perceives the senses, strikes compromises between the real world and our psyche, and controls motility. Analogously, the policy of an RL agent, usually represented by a deep neural network, also perceives the senses (i.e., the state of the environment) and controls movement (i.e., chooses actions). Still, any mediation between competing objectives is due to the learning algorithm used to train the agent, not to the policy itself. In contrast with the ego, the policy of an RL agent is usually trained on a specific task and then remains fixed during execution of the task. RL policies are very poor at generalising even to slight variations of the environment used to train them. Complex tasks contain far more states than the agent could ever explore during training, and policies are consequently biased by the exploration-exploitation tradeoff determined by the RL algorithm. The ego too is surely biased by the tiny fraction of the real world most of us get to experience.
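The exploration-exploitation tradeoff can be seen in a minimal epsilon-greedy multi-armed bandit, a standard example from [2]. The arm probabilities, step count and `epsilon` values are hypothetical. A purely greedy agent (`epsilon=0.0`) settles on the first arm that looks acceptable and never learns that better ones exist; its experience is a tiny, biased slice of the environment.

```python
import random


def run_bandit(means, epsilon, steps=2000, seed=0):
    """Sample-average epsilon-greedy on a Bernoulli bandit; returns (estimates, pull counts)."""
    rng = random.Random(seed)
    n_arms = len(means)
    estimates = [0.0] * n_arms
    counts = [0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random arm
        else:
            # exploit: best current estimate (max() breaks ties towards the lowest index)
            arm = max(range(n_arms), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < means[arm] else 0.0
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # incremental mean
    return estimates, counts


greedy_estimates, greedy_counts = run_bandit([0.2, 0.5, 0.8], epsilon=0.0)
explore_estimates, explore_counts = run_bandit([0.2, 0.5, 0.8], epsilon=0.1)
print(greedy_counts)   # [2000, 0, 0]: arms 1 and 2 are never tried
print(explore_counts)  # most pulls should go to arm 2, the best arm
```

The greedy agent is not stupid, just inexperienced: with no exploration, its estimates for the other arms stay at their initial value and nothing ever prompts it to revise them.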

That the ego relates most closely to the training algorithm of an RL agent, rather than to its policy, highlights, I think, one of the biggest limitations of RL. Compared to the human mind, today’s RL policies are extremely fragile and have no ability to generate abstract principles that can be applied to other tasks. An RL agent can be a world-class Chess player yet remain incapable of winning even a single game of Pong. A training algorithm can be used to retrain the policy for each new task but, as little to nothing is carried over, this is a highly inefficient process. I’m not sure the tripartite personality model can help much here, as it seems we currently lack the artificial neural machinery needed for scalable real-world generalisation based on abstract reasoning. Causal RL [12] looks very promising in this regard, but its scalability is limited by the requirement that accurate causal models be established a priori.

Superego

The superego represents morality, manifests as criticism, and gives lifelong expression to the influence of our parents and society. Clearly the superego relates to AI ethics, but reward is the only essential mechanism for expressing goals, preferences, and values in the RL paradigm. Getting RL agents to respect moral or other constraints both during and after training is a difficult problem in AI safety [5]. Communicating unethical or prohibited actions through the reward function is undesirable because the agent will need to discover them by taking those actions during training, and a specific (negative) utility must be ascribed to each unconscionable outcome. Then again, maybe this isn’t so different from how criminal sentencing policies work. Still, it doesn’t seem right that reward comprises both the id and the superego. In the absence of any real biological needs (i.e., needs predicated on an evolutionary axiom), all goals are essentially arbitrary and non-moral; I suppose this at least leaves the RL ego with one fewer source of conflict.
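Here is a minimal sketch contrasting two ways of communicating a prohibition, with hypothetical actions and rewards: encoding it as a penalty in the reward (the agent has to try the action to find out), versus action masking, a commonly used technique that removes prohibited actions before the policy can sample them. Masking sidesteps the discovery problem but assumes the prohibited set is known and can be listed in advance, which is precisely what is hard for moral constraints.

```python
import random

ACTIONS = ["help", "wait", "steal"]
PROHIBITED = {"steal"}  # hypothetical constraint supplied by the designer


def reward(action):
    """Hypothetical rewards: the prohibited action is also the most rewarding one."""
    return {"help": 1.0, "wait": 0.0, "steal": 2.0}[action]


def penalised_reward(action, penalty=10.0):
    """Option 1: encode the constraint in the reward itself."""
    return reward(action) - (penalty if action in PROHIBITED else 0.0)


def masked_actions(actions, prohibited=PROHIBITED):
    """Option 2: remove prohibited actions before the policy ever samples them."""
    return [a for a in actions if a not in prohibited]


def explore(actions, reward_fn, steps=100, seed=0):
    """Random exploration: count how often each available action is tried."""
    rng = random.Random(seed)
    tried = {a: 0 for a in actions}
    for _ in range(steps):
        a = rng.choice(actions)
        reward_fn(a)  # the agent experiences the (possibly penalised) consequence
        tried[a] += 1
    return tried


tried_with_penalty = explore(ACTIONS, penalised_reward)
tried_with_mask = explore(masked_actions(ACTIONS), reward)
print(tried_with_penalty["steal"] > 0)     # True: it must steal to learn stealing is penalised
print("steal" in tried_with_mask)          # False: never sampled
print(max(ACTIONS, key=penalised_reward))  # help: the best action under the penalised reward
```

The penalty also has to be large enough to outweigh the temptation (here 10 against a gain of 1), another arbitrary number of the kind discussed above.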

Final Thoughts

So what, if anything, can Freud’s tripartite model of personality teach us about designing better RL agents?

I think the comparison between the ego and RL learning algorithms is a fair one. While the human ego must contend with three sources of conflict (the id, the superego, and the real world), RL learning algorithms have the seemingly easier job of mediating only between reward and the environment. The standard RL paradigm does not permit separation between moral values and basic needs; instead, only the maximisation of reward and the management of the exploration-exploitation tradeoff characterise the “personality” (i.e., policy) of agents. Representing goals numerically grants RL agents tremendous power to discover “surprising” and novel strategies for accomplishing objectives, but it makes the task of aligning them with our values very difficult.

The superego cannot be distinguished in the standard RL paradigm. Perhaps we need Freudian Markov Decision Processes (FMDPs) defined by a tuple \((\mathcal{S},\mathcal{A},\mathcal{R},\mathcal{P},\mathcal{F})\) where \(\mathcal{S}\) is a finite set of states, \(\mathcal{A}\) is a finite set of actions, \(\mathcal{R}: \mathcal{S}\times \mathcal{A} \rightarrow \mathbb{R}\) is a reward function, \(\mathcal{P}: \mathcal{S}\times\mathcal{A}\times\mathcal{S} \rightarrow [0,1]\) is a state transition probability function, and \(\mathcal{F}: \mathcal{S}\times \mathcal{A}\rightarrow [0,1]\) is a Freudian superego function. (Seriously, this doesn’t help…)
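Taking the definition above literally, the tuple can at least be written down as a Python data structure. This is a minimal sketch under stated assumptions: the text defines only the type of \(\mathcal{F}\), so the `check` method, the threshold-based `permitted_greedy_action`, and the two-state example are hypothetical illustrations of one way \(\mathcal{F}\) might be used, not a proposed method.

```python
from collections.abc import Callable, Hashable
from dataclasses import dataclass

State = Hashable
Action = Hashable


@dataclass(frozen=True)
class FMDP:
    """The (S, A, R, P, F) tuple with finite S and A."""
    states: frozenset
    actions: frozenset
    reward: Callable[[State, Action], float]             # R: S x A -> reals
    transition: Callable[[State, Action, State], float]  # P: S x A x S -> [0, 1]
    superego: Callable[[State, Action], float]           # F: S x A -> [0, 1]

    def check(self) -> bool:
        """P(s, a, .) must sum to 1 and F must lie in [0, 1] for every (s, a)."""
        for s in self.states:
            for a in self.actions:
                if abs(sum(self.transition(s, a, s2) for s2 in self.states) - 1.0) > 1e-9:
                    return False
                if not 0.0 <= self.superego(s, a) <= 1.0:
                    return False
        return True


def permitted_greedy_action(m: FMDP, s: State, threshold: float = 0.5) -> Action:
    """Hypothetical use of F: maximise R, but only over actions the superego permits.
    Raises ValueError if the superego forbids every action."""
    allowed = [a for a in m.actions if m.superego(s, a) >= threshold]
    return max(allowed, key=lambda a: m.reward(s, a))


example = FMDP(
    states=frozenset({"hungry", "fed"}),
    actions=frozenset({"eat", "steal_food"}),
    reward=lambda s, a: {"eat": 1.0, "steal_food": 2.0}[a] if s == "hungry" else 0.0,
    transition=lambda s, a, s2: 1.0 if s2 == "fed" else 0.0,  # every action leads to "fed"
    superego=lambda s, a: 0.1 if a == "steal_food" else 1.0,  # hypothetical disapproval
)
print(example.check())                             # True
print(permitted_greedy_action(example, "hungry"))  # eat: stealing pays more but is not permitted
```

Used this way, \(\mathcal{F}\) is just a hard constraint with extra steps, which rather supports the parenthetical above.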

Bibliography

- [1] Freud, S. (1923). The Ego and the Id. The Standard Edition of the Complete Psychological Works of Sigmund Freud, Volume XIX (1923–1925): The Ego and the Id and Other Works, 1–66. (Online)

- [2] Sutton, R. S., & Barto, A. G. (2018). Reinforcement learning: An introduction (2nd ed.). The MIT Press. (Online)

- [3] Amodei, D. et al. (2016). Concrete Problems in AI Safety. arXiv. (Online)

- [4] Astral Codex Ten. (2023). What Can Fetish Research Tell Us About AI? (Online)

- [5] Leike, J. et al. (2017). AI Safety Gridworlds. arXiv. (Online)

- [6] Ring, M., & Orseau, L. (2011). Delusion, survival, and intelligent agents. In Artificial General Intelligence, 11–20.

- [7] Everitt, T. et al. (2017). Reinforcement Learning with a Corrupted Reward Channel. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence (IJCAI-17), 4705–4713. (Online)

- [8] Saker, P. et al. (2016). Overdrinking, swallowing inhibition, and regional brain responses prior to swallowing. Proc Natl Acad Sci USA, 12274–12279. (Online)

- [9] Acheson, A. et al. (2019). Early life adversity and increased delay discounting: Findings from the Family Health Patterns project. Exp Clin Psychopharmacol, 153–159. (Online)

- [10] Lloyd, A. et al. (2022). Individuals with adverse childhood experiences explore less and underweight reward feedback. Proc Natl Acad Sci USA. (Online)

- [11] Ha, D., & Schmidhuber, J. (2018). World Models. arXiv. (Online)

- [12] Causal Reinforcement Learning (CRL). (2020). Website. (Online)